The recent White House “Executive Order [EO] on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” called for “the highest urgency on governing the development and use of artificial intelligence [AI] safely and responsibly, [with] a coordinated Federal Government-wide approach.” 1

This overview will clarify the EO’s requirements for government agencies, provide clear guidance for executing the actions specified in the EO, and help you strategically navigate AI’s challenges and risks.

AI is changing how the workforce performs job functions and presents opportunities for federal agencies to meet their missions more effectively. However, adopting AI comes with risk, and agencies need to proactively manage the adoption process.

As stated in the October 2023 EO, “Harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks. This endeavor demands a society-wide effort that includes government, the private sector, academia, and civil society.” 2

The purpose of the EO is to ensure the safe, secure, and trustworthy development and use of AI in society. Agencies must put regulations and policies in place to guarantee responsible management of AI adoption. Accordingly, federal agencies need to take several steps to meet the requirements of the EO.

For government agencies, there are several aspects of the EO that are particularly important:

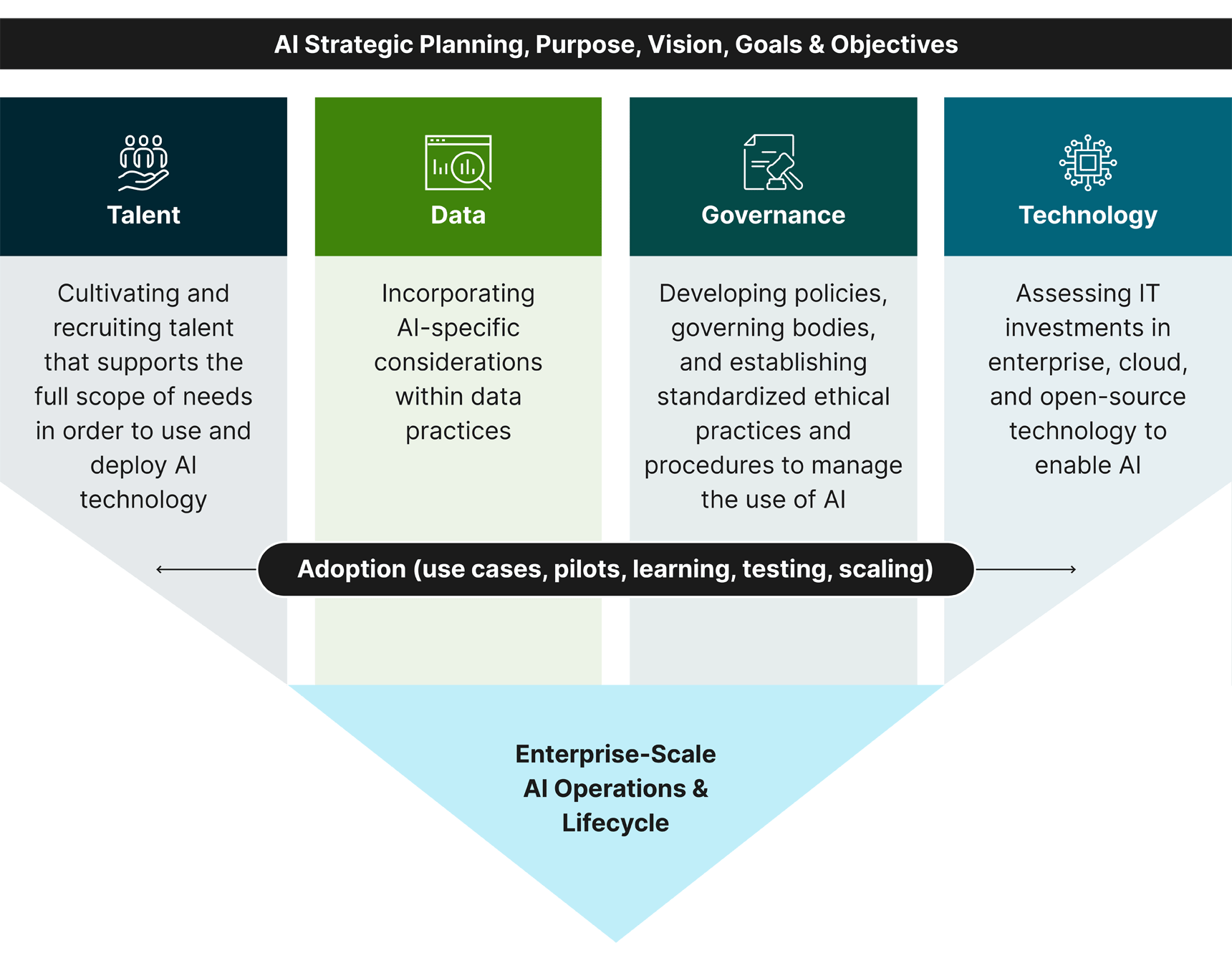

AI adoption is a unique and complex challenge for every organization. From our experience supporting federal agencies with AI adoption, Guidehouse recommends agencies develop a comprehensive AI strategy to establish direction and focus, optimize resources, improve decision-making, and enable long-term sustainability of AI implementation projects. We recommend federal agencies designate a CAIO and develop an AI strategy as the first order of business to address the requirements of the EO. An effective AI strategy should include goals that address the talent, data, governance, and technology domains. With the CAIO and AI strategy in place, agencies should then establish a governance board to execute the strategy and establish AI risk management practices.

Meeting the EO’s requirements demands organizational change. This section provides fundamental guidance for addressing each requirement.

Choosing a Chief Artificial Intelligence Officer

The CAIO role will be critical to agencies as they adopt AI and institute the necessary safeguards. The CAIO will need to create the agency’s AI strategy, if one is not already in place, and coordinate with governance bodies and various stakeholder groups on the activities and tasks required to apply the strategy and governance. An ideal candidate for the CAIO position should understand industry-leading practices for creating tools and maturing the data foundation to advance the organization’s AI use. The EO requires the CAIO to execute specific tasks, such as managing the agency’s use case inventory and prioritizing use cases for piloting and implementation.

When selecting the appropriate person for the CAIO role, agencies should consider a diverse blend of skills, including strategic planning and an understanding of technology, risk, mission requirements, ethical considerations, and workforce needs. The best candidate will be someone who can balance a forward-thinking, innovative perspective with a pragmatic, risk-aware approach. A key outcome of this person’s work will be to help the agency capitalize on the opportunities and benefits that AI presents, while managing risk, as they lead the organization through the process of AI transformation.

Building an AI Governance Board

Agencies should establish an enterprise AI governance board to effectively implement AI policy and establish working groups to accomplish the actions necessary to achieve the AI strategy. The AI governance board must bring together a group of leaders from various disciplines—policy, legal, information technology (IT), human resources (HR), and mission functions—to collaborate on key decisions and support the new CAIO. The board should involve people with a range of perspectives and backgrounds to ensure the inclusion of broad, cross-functional viewpoints, which is necessary to navigate the risks and complexities of AI.

The CAIO and the governance board should work closely together to navigate the ever-changing AI landscape, discuss emerging topics, and triage any issues and challenges as they arise. We recommend using a two-tiered governance model, with an executive board for decision-making and strategic oversight and dedicated working groups to tactically address specific topics and bring recommendations to the executive board as required.

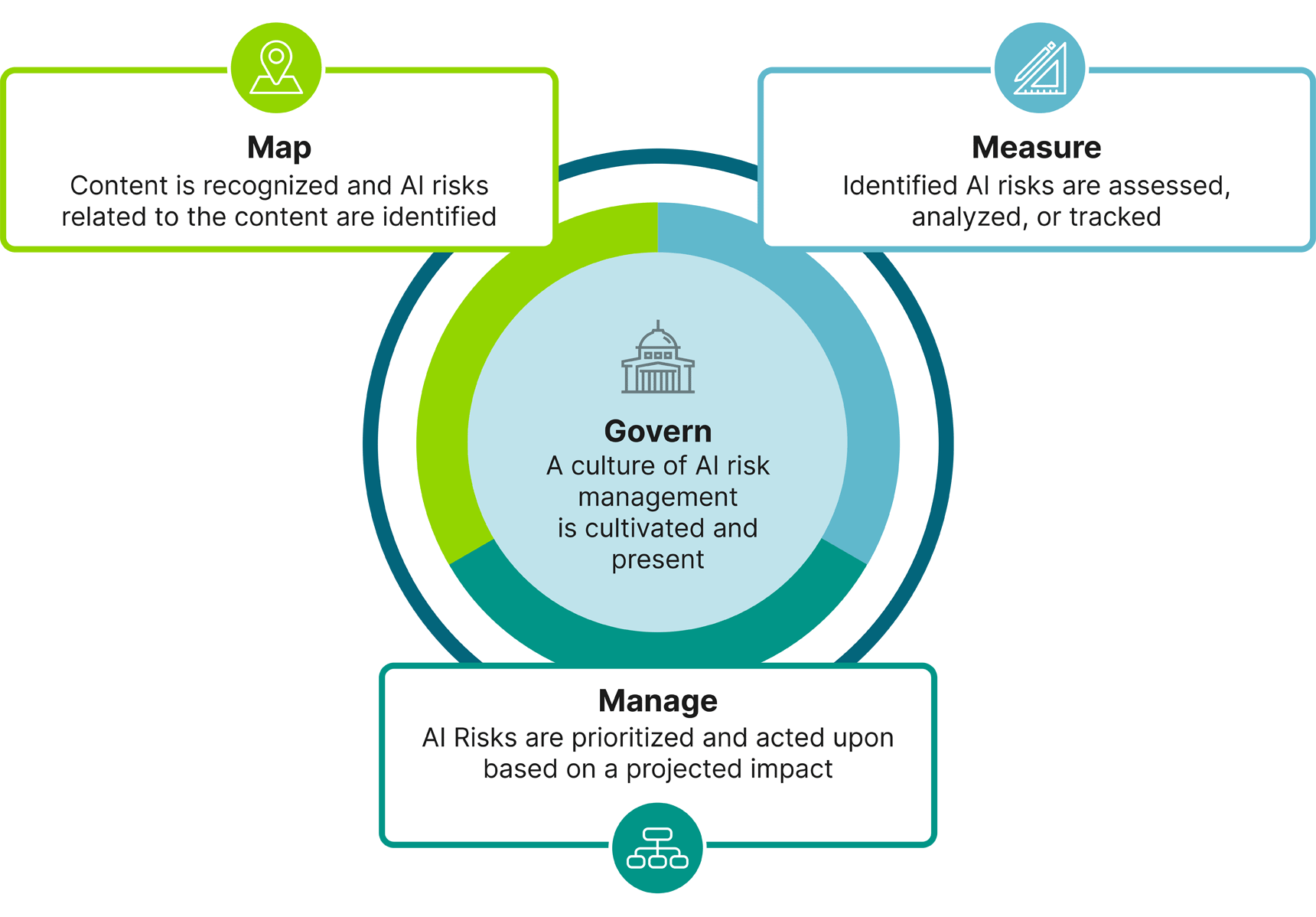

Developing Risk Management Practices

AI comes with a range of risks that will continue to develop as the technology becomes increasingly advanced. These diverse potential concerns include:

To mitigate these risks and ensure responsible, secure use of AI, government agencies should apply the National Institute of Standards and Technology (NIST) AI Risk Management Framework. 3

Assessing the Agency’s Use Cases

Agencies must have a process in place for collecting, reviewing, reporting, and publishing agency AI use case inventories. As outlined in the EO, each agency must annually submit an inventory of its AI use cases to the Office of Management and Budget (OMB) through the integrated data collection (IDC) process and subsequently post a public version on the agency’s website. Agencies should ensure their IDC processes have access to the proper channels to connect with AI practitioners and collect all AI use cases within the agency. Agencies will also need to develop a process to review the use cases for compliance with the principles listed in the EO. Agencies will need to involve stakeholders from multiple disciplines, including data, technology, and legal, as well as the CAIO, other responsible AI officials, and agency senior leadership, to develop their AI use case inventories, submit them to OMB, and publish them.

In the future, agencies will also be required to identify which AI use cases impact safety and rights. They will need to report additional detail on the risks that come with each use and how the agency is managing those risks. Agencies must assess the impact, benefits, and risk of each AI use case; test the AI solution; review the results with the CAIO and AI governance board; and report the results and outcomes to OMB as a component of the annual AI use case inventory.
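The inventory process described above can be pictured as a small data structure. The following is a minimal sketch only, assuming a simplified record format: every field name here (such as `safety_impacting`, `publishable`, and `risk_notes`) is hypothetical and is not OMB’s official reporting schema. It shows how an agency might flag use cases that need additional risk review and derive a public version of its inventory from the internal one.

```python
from dataclasses import dataclass, asdict
import json


@dataclass
class AIUseCase:
    # All field names are illustrative assumptions, not an official OMB schema.
    name: str
    office: str
    summary: str
    safety_impacting: bool = False
    rights_impacting: bool = False
    publishable: bool = True   # False if the entry is withheld from the public version
    risk_notes: str = ""       # internal notes on how identified risks are managed


def needs_risk_review(use_case: AIUseCase) -> bool:
    """A use case that impacts safety or rights gets additional review."""
    return use_case.safety_impacting or use_case.rights_impacting


def public_inventory(use_cases: list) -> list:
    """Build the public version: publishable entries, public fields only."""
    public_fields = ("name", "office", "summary",
                     "safety_impacting", "rights_impacting")
    return [
        {key: value for key, value in asdict(uc).items() if key in public_fields}
        for uc in use_cases
        if uc.publishable
    ]


# Hypothetical example entries.
inventory = [
    AIUseCase("Document triage", "Records Office",
              "Routes incoming correspondence."),
    AIUseCase("Benefits eligibility screening", "Program Office",
              "Flags applications for manual review.",
              rights_impacting=True,
              risk_notes="Human review of every adverse flag."),
    AIUseCase("Internal pilot", "IT", "Experimental chatbot.",
              publishable=False),
]

flagged = [uc.name for uc in inventory if needs_risk_review(uc)]
print("Needs risk review:", flagged)
print(json.dumps(public_inventory(inventory), indent=2))
```

Keeping the internal record and the public view separate, as in this sketch, lets the CAIO and governance board retain risk detail internally while publishing only the fields intended for the agency’s website.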

Agencies seeking to successfully adopt AI will need to not only address the above factors, but also apply additional considerations. We recommend the following practices to ensure effective AI implementation.

Establishing Ethical AI Practices: An agency’s AI strategy must incorporate ethical and responsible practices to mitigate legal risk and remain compliant with the EO’s requirements. Leading practices include establishing policy on ethical AI principles and creating a review process for the approval of AI projects.

Implementing Data Governance: The quality and capability of AI models depend on the quality and reliability of the underlying data. Every AI project is equally a data project, because the data must be up to date, accurate, and consistent for AI to be effective. Agencies should integrate their data strategy with their AI strategy so that data governance processes and data management practices work together to improve data quality and build a reliable data foundation.

Enabling AI Use Case Experimentation: Agencies can increase adoption by setting up AI test beds for experimentation using off-the-shelf services from vendors and cloud service providers. An AI test bed uses shared infrastructure to give the workforce an IT environment where they can learn how to use AI through hands-on experience.

Promoting Skills and Workforce Education: The CAIO must collaborate with HR to identify skill gaps in the current workforce and determine a plan to build capabilities via training and recruitment strategies. Tactical actions include creating and sharing AI position descriptions throughout the organization and developing training.
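As one way to make the data governance practice above concrete, the sketch below checks a small set of records for the qualities named there: data that is current, complete, and consistent. It is an illustration under stated assumptions, not a prescribed method. The record layout (`id`, `updated`, `value`), the one-year staleness threshold, and the function name `quality_report` are all hypothetical.

```python
from datetime import date, timedelta


def quality_report(records, today, max_age_days=365,
                   required=("id", "updated", "value")):
    """Count basic data quality issues in a list of dict records.

    Field names and the staleness threshold are illustrative assumptions.
    """
    issues = {"missing": 0, "stale": 0, "duplicate_id": 0}
    seen_ids = set()
    cutoff = today - timedelta(days=max_age_days)
    for record in records:
        # Completeness: every required field must be present and non-null.
        if any(record.get(field) is None for field in required):
            issues["missing"] += 1
            continue
        # Currency: the record must have been updated since the cutoff date.
        if record["updated"] < cutoff:
            issues["stale"] += 1
        # Consistency: each identifier should appear only once.
        if record["id"] in seen_ids:
            issues["duplicate_id"] += 1
        seen_ids.add(record["id"])
    return issues


# Hypothetical sample data.
records = [
    {"id": 1, "updated": date(2024, 1, 10), "value": 3.2},
    {"id": 2, "updated": date(2021, 5, 1), "value": 1.0},   # stale
    {"id": 2, "updated": date(2024, 2, 1), "value": 1.1},   # duplicate id
    {"id": 3, "updated": date(2024, 2, 1), "value": None},  # missing value
]

report = quality_report(records, today=date(2024, 3, 1))
print(report)
```

A report like this could feed the governance board’s review of whether a data set is ready to support an AI use case; real checks would depend on the agency’s own data standards.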

The emerging challenges of AI are too complex to break down and solve in one short article. The points discussed here will help you approach the first steps. AI is a complex emerging technology, and government agencies need to implement the EO’s requirements with an organized approach. Agencies can navigate this complexity, accelerate adoption, and mitigate risks by partnering with AI experts with proven experience and knowledge of industry-leading best practices.

Guidehouse offers a proven track record from strategy to implementation of AI initiatives, using a holistic approach that integrates people, data, governance, and technology to streamline adoption.

Our established framework supports all facets of AI adoption while leveraging vendor-agnostic tools and methodologies that enable our team to provide transformational solutions.

With expert knowledge of AI governance and risk management frameworks and experience implementing the EO requirements at federal agencies, our team can serve as your ideal guide through this new and continuously evolving AI landscape.

1. Exec. Order No. 14,110 of October 30, 2023. https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/.

2. Exec. Order No. 14,110 of October 30, 2023. https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/.

3. National Institute of Standards and Technology, “AI Risk Management Framework: Initial Draft,” March 17, 2022, https://www.nist.gov/system/files/documents/2022/03/17/AI-RMF-1stdraft.pdf.

Guidehouse is a global AI-led professional services firm delivering advisory, technology, and managed services to the commercial and government sectors. With an integrated business technology approach, Guidehouse drives efficiency and resilience in the healthcare, financial services, energy, infrastructure, and national security markets.