
It is no secret that leveraging AI and Machine Learning (ML) models provides a wealth of benefits to financial institutions (FIs) in their fight against financial crime. In fact, 61% of FIs surveyed in our study, The Evolving Role of Machine Learning in Fighting Financial Crime, reported that implementing AI/ML solutions helped reduce risk.

Beyond general risk reduction, FIs often look to AI/ML solutions to specifically improve the detection and prevention of financial crime, particularly in the Anti-Money Laundering (AML) space. AML monitoring has historically been plagued by ineffective and/or inefficient transaction monitoring (TM) rules, a problem compounded by increasingly sophisticated money laundering schemes. While AI/ML models can bolster financial crime detection and prevention efforts, institutions must ensure that these models are built and validated correctly. Incorrectly or incompletely validated models expose institutions to the risk of incorrect detection, inconsistent model performance, gaps in monitoring programs, and/or failure to meet regulator expectations. This may help explain why only 51% of surveyed respondents reported achieving efficiency gains through the implementation of AI/ML.

Because of their added complexity relative to “traditional” AML transaction monitoring rules, AI/ML models require specific expertise during both the model development and validation stages. Adding these types of models to an FI’s monitoring environment also requires internal model validation and internal audit oversight. Unfortunately, many financial institutions are not adequately equipped in these areas: they lack personnel familiar enough with AI/ML modeling in the AML space to develop and execute AI/ML-specific validations and to perform internal audits.

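While there are currently no AI/ML-specific regulatory requirements, these models will likely soon receive closer regulatory scrutiny. Historically, most model validation approaches have used the Federal Reserve’s SR Letter 11-7 as their basis, which outlines three key components of model validation:

1. Evaluation of conceptual soundness, including developmental evidence
2. Ongoing monitoring, including process verification and benchmarking
3. Outcomes analysis, including back-testing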

During “traditional” model validation, each of these components receives roughly equal attention, as most rules have straightforward logic and code that lend themselves to simple use-case evaluation and easy independent replication of both inputs and outputs. However, because of their statistical complexity, the scarcity of empirical data, and their inherent randomness, replicating the code or alert/score output of AI/ML models is typically not a valuable exercise. In fact, one of the key benefits of AI/ML is the ability to develop models based on results averaged over dozens or hundreds of iterations, something that is neither feasible nor desirable to reproduce as part of validation.
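To illustrate why replicating a single run has limited value, here is a deliberately simple stand-in for an ensemble model: a score averaged over many bootstrap resamples of synthetic data. This is a sketch under stated assumptions, not the method of any particular AML model; the functions `bagged_score` and `seed_spread` and the data are hypothetical. Individual randomized runs differ from one another, while the averaged output settles down as the number of iterations grows. One possible check, offered as an assumption rather than a prescribed practice, is to examine how stable the aggregated result is across random seeds instead of reproducing one run exactly.

```python
import random
import statistics
from typing import Iterable, List, Sequence


def bagged_score(data: Sequence[float], seed: int, iterations: int) -> float:
    """Average of `iterations` bootstrap means: a toy stand-in (hypothetical)
    for a model whose output is averaged over many randomized runs."""
    rng = random.Random(seed)
    n = len(data)
    estimates: List[float] = []
    for _ in range(iterations):
        # Resample with replacement, as in bagging.
        resample = [data[rng.randrange(n)] for _ in range(n)]
        estimates.append(statistics.fmean(resample))
    return statistics.fmean(estimates)


def seed_spread(data: Sequence[float], seeds: Iterable[int], iterations: int) -> float:
    """Population standard deviation of the averaged score across random seeds."""
    return statistics.pstdev([bagged_score(data, s, iterations) for s in seeds])


if __name__ == "__main__":
    # Synthetic "risk scores" for illustration only; no real data is implied.
    gen = random.Random(7)
    data = [gen.expovariate(1.0) for _ in range(200)]
    seeds = range(20)
    print(f"spread across seeds, 1 iteration:    {seed_spread(data, seeds, 1):.5f}")
    print(f"spread across seeds, 100 iterations: {seed_spread(data, seeds, 100):.5f}")
```

A fixed seed reproduces the same number, but that only confirms the random generator was reseeded; the more informative observation is that the spread across seeds shrinks as iterations increase.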

Most AI/ML models use well-tested and generally accepted statistical algorithms as their foundation, providing some inherent level of assurance in the model’s output, but only when the algorithm is appropriate for the identified use case and the inputs are correctly configured. AI/ML model validation should, therefore, place more emphasis on the first component listed above: conceptual soundness.

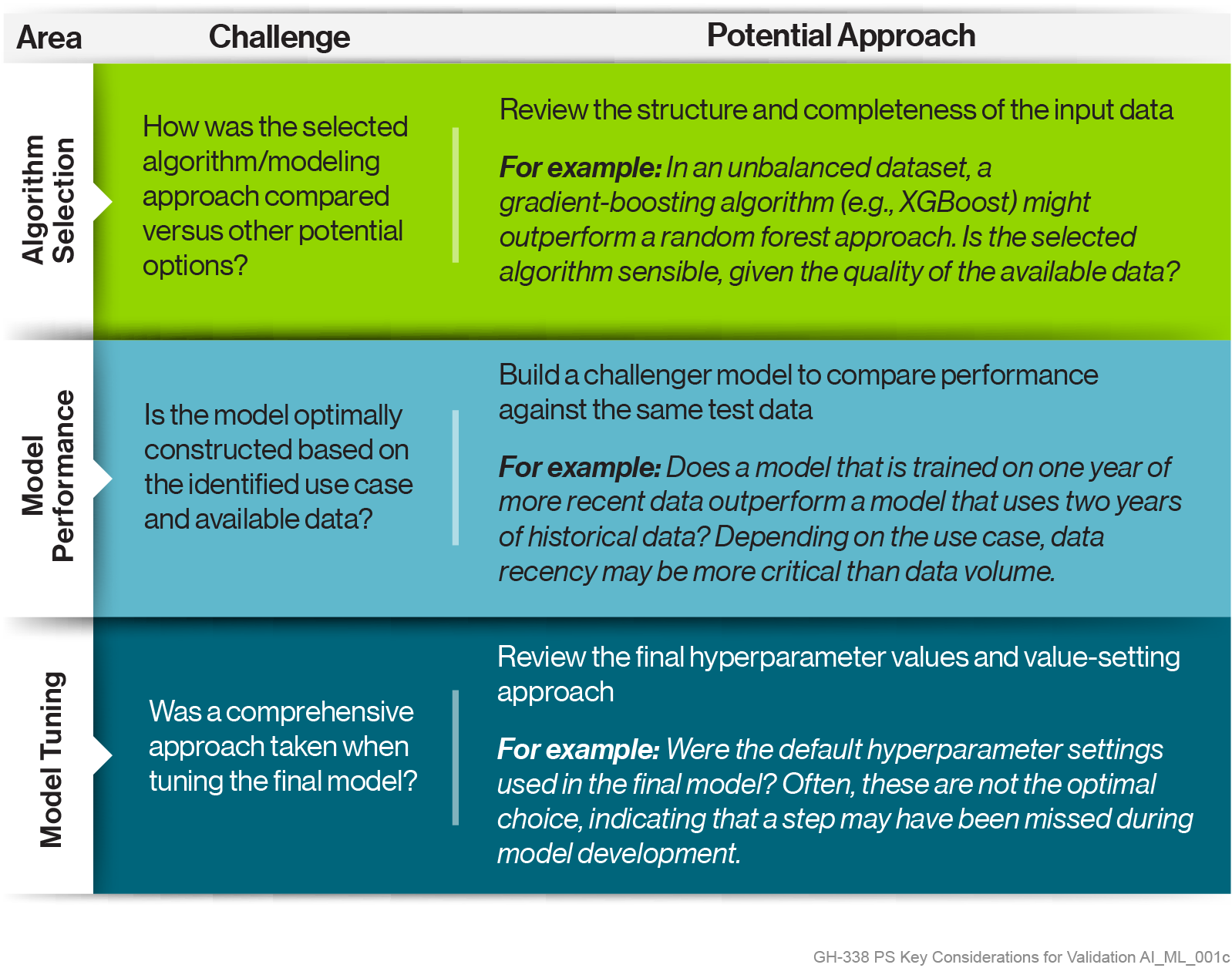

In particular, the feature engineering (the selection, transformation, and testing of included variables) and model tuning processes should receive substantially more attention than is usually given in validation of “traditional” rules. Validation of these areas of AI/ML models should, at minimum:

Backstopping sound design and development, FIs should also have clear model governance and controls in place to ensure that AI/ML models are being correctly reviewed/approved, deployed, monitored, and updated in a consistent, comprehensive manner. Model validation in this area should cover topics such as sampling and/or below-the-line analyses for events cleared by the model, performance monitoring to identify shifts in underlying data or model drift over time, and model review triggers (e.g., for ML alert scoring models, the tuning or calibration of underlying TM rules).
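Performance monitoring for shifts in underlying data can be illustrated with the Population Stability Index (PSI), one common way to quantify how far a score or feature distribution has moved from a baseline. The sketch below is illustrative only and is not drawn from the source: it assumes a baseline sample (e.g., model scores from the validation period) and a current sample, bins both at the baseline's deciles, and computes PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the baseline and current shares in bin i. The function names, the decile binning, and the small `eps` floor for empty bins are our own assumptions.

```python
import bisect
import math
import random
from typing import List, Sequence


def quantile_edges(baseline: Sequence[float], bins: int = 10) -> List[float]:
    """Interior bin edges at the baseline's empirical quantiles (deciles by default)."""
    if not baseline or bins < 2:
        raise ValueError("need a non-empty baseline and at least two bins")
    ordered = sorted(baseline)
    n = len(ordered)
    return [ordered[(i * n) // bins] for i in range(1, bins)]


def bin_shares(values: Sequence[float], edges: List[float], eps: float = 1e-6) -> List[float]:
    """Share of `values` in each bin; `eps` floors empty bins so log() stays finite."""
    if not values:
        raise ValueError("cannot bin an empty sample")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(baseline, bins)
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    # Synthetic scores for illustration only; no real monitoring data is implied.
    rng = random.Random(42)
    baseline = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    similar = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    drifted = [rng.gauss(0.5, 1.0) for _ in range(5000)]
    print(f"PSI, similar period: {population_stability_index(baseline, similar):.4f}")
    print(f"PSI, drifted period: {population_stability_index(baseline, drifted):.4f}")
```

Practitioners often cite rules of thumb such as PSI below about 0.1 indicating stability and above about 0.25 indicating a significant shift, but these are heuristics rather than regulatory thresholds; an institution would define its own review triggers as part of its model governance.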

While there is no silver bullet in the fight against financial crime, FIs must continue to adapt their strategies to ensure their AML programs are both effective and efficient. As institutions look to AI/ML to solve existing issues or improve their monitoring environments, they must ensure that their model validation approaches evolve as well, giving assurance that solutions are developed and implemented properly, remain adaptable to changes over time, and provide maximum benefit.

While model validation is a key component of the overall monitoring environment, it is not the only consideration. Our next article in this series will discuss how AI/ML models should be developed to reflect the business or regulatory risks identified for coverage.

Guidehouse is a global AI-led professional services firm delivering advisory, technology, and managed services to the commercial and government sectors. With an integrated business technology approach, Guidehouse drives efficiency and resilience in the healthcare, financial services, energy, infrastructure, and national security markets.