
Artificial intelligence (AI) is now woven into the fabric of modern defense and national security operations. Intelligence analysts rely on AI to process vast datasets. Autonomous systems navigate airspace. Command-and-control systems use machine learning to accelerate decision-making.

However, these technological advances also introduce new attack surfaces and vulnerabilities: AI systems themselves have become prime targets for adversarial manipulation.

This threat has evolved beyond academic curiosity and into a mission-assurance risk. Adversarial AI attacks—such as data poisoning, model backdoors, prompt injection, and supply chain compromises—have transitioned from research demonstrations to operational realities. Sophisticated adversaries now actively probe AI vulnerabilities in defense systems, attempting to corrupt intelligence analysis, misdirect autonomous platforms, or inject false patterns into decision support systems.

Peer-reviewed and operational evaluations continue to show that static defenses are insufficient. A foundational study achieved 100% attack success rates against neural networks hardened with defensive distillation.1 Surveys of the field report that adversarial techniques routinely exceed 80-90% success rates in controlled environments.2

Perhaps most alarmingly, recent adaptive attacks have bypassed 12 state-of-the-art large language model jailbreak defenses with over 90% success.3
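Success-rate figures like these are ratios. One common way to compute an attack success rate is to take the inputs a model originally classifies correctly and count the fraction whose prediction flips once an adversarial perturbation is applied. The minimal sketch below illustrates that calculation under stated assumptions. It uses a hypothetical two-feature logistic-regression model with hand-picked weights, and the Fast Gradient Sign Method (FGSM), which is a much simpler attack than the optimization-based attack in the first cited study. It is a teaching example, not a red-teaming tool.

```python
import math

# Hypothetical toy model: fixed weights for a two-feature logistic-regression classifier.
WEIGHTS = [2.0, -1.0]
BIAS = 0.0


def score(x):
    """Logistic (sigmoid) probability that x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    """Class 1 if the probability is at least 0.5, else class 0."""
    return 1 if score(x) >= 0.5 else 0


def sign(v):
    """Return +1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, label, epsilon):
    """Fast Gradient Sign Method for logistic regression.

    For binary cross-entropy loss, the gradient with respect to the input is
    (p - y) * w, so each feature is nudged by epsilon in the direction that
    increases the loss.
    """
    p = score(x)
    grad = [(p - label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


def attack_success_rate(samples, epsilon):
    """Fraction of correctly classified samples whose prediction the attack flips."""
    attempted = flipped = 0
    for x, label in samples:
        if predict(x) != label:
            continue  # skip inputs the model already gets wrong
        attempted += 1
        if predict(fgsm(x, label, epsilon)) != label:
            flipped += 1
    return flipped / attempted if attempted else 0.0


# Hypothetical labeled inputs: (features, true class).
SAMPLES = [
    ([1.0, 0.5], 1),
    ([0.2, 0.1], 1),
    ([-1.0, 0.5], 0),
    ([-0.1, 0.05], 0),
]

for eps in (0.0, 0.3, 1.0):
    print(f"epsilon={eps}: attack success rate = {attack_success_rate(SAMPLES, eps):.0%}")
```

With these toy samples, the script reports 0%, 50%, and 100% for perturbation budgets of 0.0, 0.3, and 1.0. This shows why a single robustness number means little unless the attack and its budget are stated alongside it.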

These findings underscore a critical reality: static defenses and one-time security assessments are inadequate against evolving adversarial threats.

Continuous testing, red-teaming, and dynamic hardening are critical to protecting national missions and maintaining operational trust. Meeting this challenge requires more than incremental security improvements. It demands a fundamental shift toward lifecycle-based AI resilience aligned with the White House's 2025 AI Action Plan and with Office of Management and Budget (OMB) memos on federal AI governance.

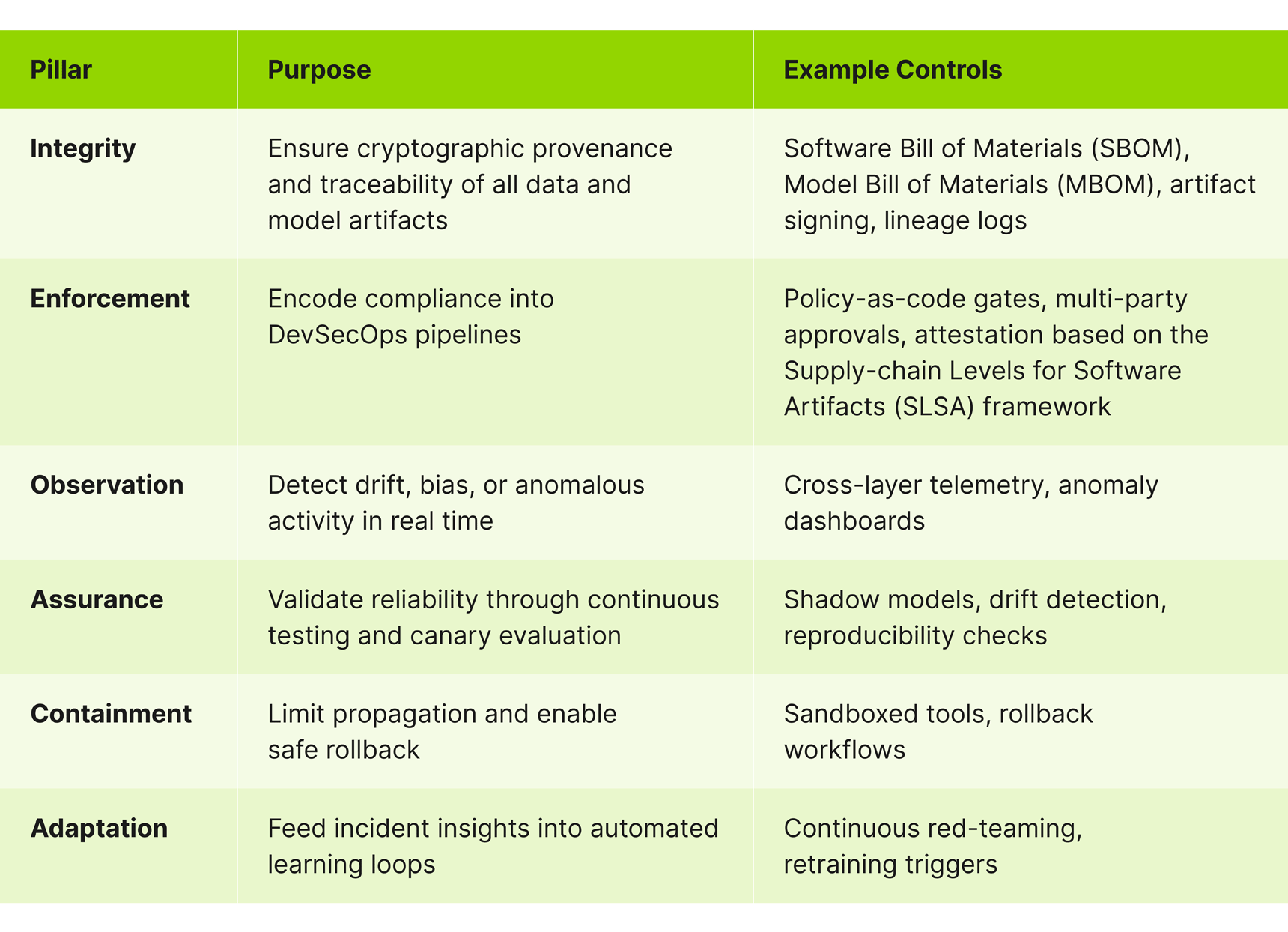

Guidehouse’s lifecycle framework establishes six complementary pillars that embed continuous assurance across the entire AI pipeline.

Transforming AI security requires methodical execution. Our three-phase rollout plan for the framework balances quick wins with sustainable governance, and each phase builds on the previous one.

Phase one establishes foundational controls for provenance and policy enforcement. Phase two scales monitoring and assurance. Phase three embeds audit readiness and adaptive governance.

Scaling from pilot to the whole portfolio can take anywhere from 6 to 12 months, depending on agency maturity, tooling, and contract constraints.
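Provenance controls often start with a simple rule: refuse to deploy any model artifact whose cryptographic hash does not match an approved manifest. The sketch below shows that check using Python's standard `hashlib` module. The manifest format, the artifact names, and the in-memory byte strings (standing in for files read from disk) are hypothetical assumptions for illustration. A production pipeline would also need to sign the manifest itself so that an attacker cannot simply rewrite it.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(artifacts: dict[str, bytes]) -> dict[str, str]:
    """Record the expected digest of every approved artifact (run at approval time)."""
    return {name: sha256_hex(data) for name, data in artifacts.items()}


def verify_artifacts(artifacts: dict[str, bytes], manifest: dict[str, str]) -> list[str]:
    """Return the sorted names of artifacts that fail verification.

    An artifact fails if it is absent from the manifest, if its digest differs
    from the approved one, or if the manifest lists it but it was not supplied.
    """
    failures = set()
    for name, data in artifacts.items():
        expected = manifest.get(name)
        # compare_digest performs a constant-time string comparison
        if expected is None or not hmac.compare_digest(expected, sha256_hex(data)):
            failures.add(name)
    failures.update(name for name in manifest if name not in artifacts)
    return sorted(failures)


# Hypothetical artifacts approved during the pilot.
approved = {"model.bin": b"weights-v1", "tokenizer.json": b'{"vocab": 32000}'}
MANIFEST = build_manifest(approved)

# At deployment time, a tampered model file is flagged and the rollout is blocked.
deployed = {"model.bin": b"weights-v1-poisoned", "tokenizer.json": b'{"vocab": 32000}'}
failures = verify_artifacts(deployed, MANIFEST)
if failures:
    print("Deployment blocked; unverified artifacts:", failures)
```

The check is fail-closed by design. Unknown artifacts and missing artifacts are treated exactly like modified ones, so a supply-chain substitution cannot slip through simply because nobody listed it.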

Strategic leadership priorities

Technology alone can’t secure AI systems. Leadership commitment is essential for sustaining adversarial AI resilience beyond the three-phase plan. Executives must align governance, resources, and accountability mechanisms to institutionalize resilience and drive organizational change through five critical actions:

By treating these leadership actions as extensions of the three-phase rollout plan, agencies can reinforce a repeatable cycle of governance, assurance, and adaptation aligned with federal AI mandates and mission-assurance objectives.

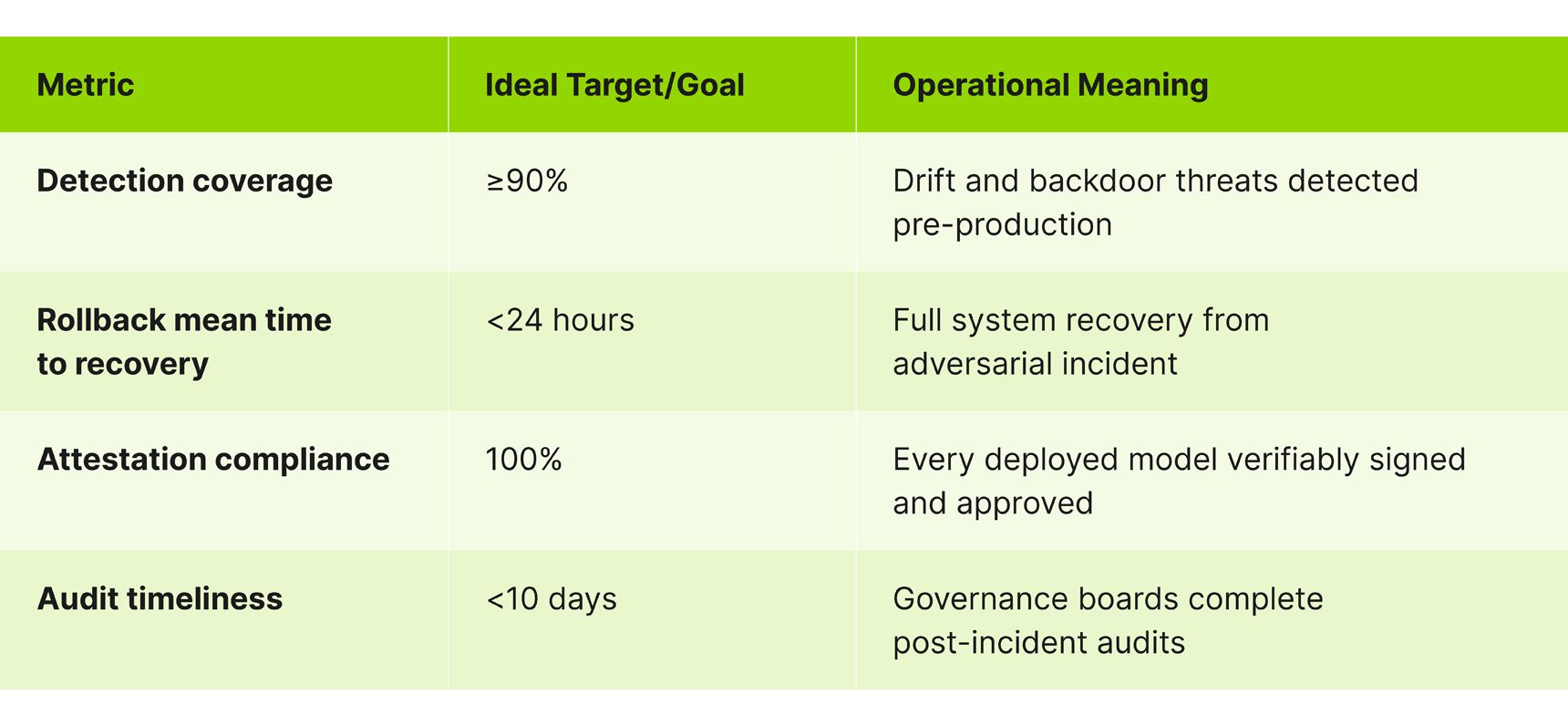

Measurable AI resilience enables effective executive oversight and mission assurance. These readiness indicators provide executive-level metrics for defense and national security programs implementing the lifecycle framework's six pillars.

Each metric links performance outcomes with governance maturity to give leaders a clear basis for tracking progress and resource decisions.
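The sketch below shows how one time-based indicator could be computed. It derives mean time to detect (MTTD) and mean time to contain (MTTC), in hours, from incident records. The choice of metrics, the `Incident` record fields, the sample timestamps, and the 24-hour target are all hypothetical assumptions. They are not the specific indicators defined in this framework.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    """Hypothetical record of one adversarial-AI incident."""
    started: datetime    # when malicious activity began (established during investigation)
    detected: datetime   # when monitoring raised the alert
    contained: datetime  # when the affected model or pipeline was isolated


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600.0


def readiness_metrics(incidents: list[Incident]) -> dict[str, float]:
    """Mean time to detect (MTTD) and mean time to contain (MTTC), in hours."""
    if not incidents:
        raise ValueError("at least one incident is required")
    return {
        "mttd_hours": mean(_hours(i.detected - i.started) for i in incidents),
        "mttc_hours": mean(_hours(i.contained - i.detected) for i in incidents),
    }


def meets_target(metrics: dict[str, float], target_hours: float = 24.0) -> bool:
    """True if the average time from onset to containment is within the hypothetical target."""
    return metrics["mttd_hours"] + metrics["mttc_hours"] <= target_hours


# Hypothetical incident log.
incidents = [
    Incident(datetime(2025, 1, 1, 0), datetime(2025, 1, 1, 2), datetime(2025, 1, 1, 6)),
    Incident(datetime(2025, 1, 2, 0), datetime(2025, 1, 2, 4), datetime(2025, 1, 2, 12)),
]
metrics = readiness_metrics(incidents)
print(metrics, "meets 24-hour target:", meets_target(metrics))
```

Reporting detection and containment separately tells leaders where time is lost. Their sum is the average time from onset to containment, which is the figure a "within hours, not days" goal would be judged against.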

Adversarial AI represents one of the greatest threats and one of the greatest opportunities in modern defense technology. AI resilience builds operational trust, and organizations that master it will maintain superiority in contested, continually evolving environments. Those that don't risk mission failure when adversaries exploit AI vulnerabilities.

Our lifecycle framework transforms AI security from reactive patching to proactive resilience. By implementing it, you can detect and contain adversarial threats within hours rather than days, preserve operational integrity under evolving conditions, and build measurable, auditable AI governance aligned with federal mandates.

With committed leadership, systematic implementation, and continuous improvement, AI can serve as a force multiplier for national security—trusted, resilient, and ready for the challenges ahead.

These insights are derived from Guidehouse’s AI Studio, which operates at the intersection of proven expertise and emerging technology. We help you move from idea to impact—quickly, securely, and responsibly—through capability modules designed for reuse, integration, and scalability. Built with human-in-the-loop oversight, these modules combine automation with expert review to ensure accountability, accuracy, and trust.

1. arXiv, “Towards Evaluating the Robustness of Neural Networks.”

2. ACM Computing Surveys, “Adversarial Attacks and Defenses in Deep Learning: From a Perspective of Cybersecurity.”

Guidehouse is a global AI-led professional services firm delivering advisory, technology, and managed services to the commercial and government sectors. With an integrated business technology approach, Guidehouse drives efficiency and resilience in the healthcare, financial services, energy, infrastructure, and national security markets.