
Financial fraud has entered a new era. While identity credential theft remains a persistent threat, the new frontier of risk lies in the sophisticated manipulation of the account holder themselves. Today’s most damaging scams are sophisticated social engineering campaigns that coerce legitimate customers into willingly parting with their funds.

Generative AI has amplified this threat. Fraudsters can now produce hyper-realistic deepfake video, voice, and text, dismantling the traditional trust signals. Financial institutions face a critical challenge: balancing seamless customer experiences with the urgent need to intercept sophisticated scams.

To protect customers and the institution itself, a new prevention paradigm is required—one that moves beyond simple rule-based defenses to deeply understand the context and behavior behind every interaction.

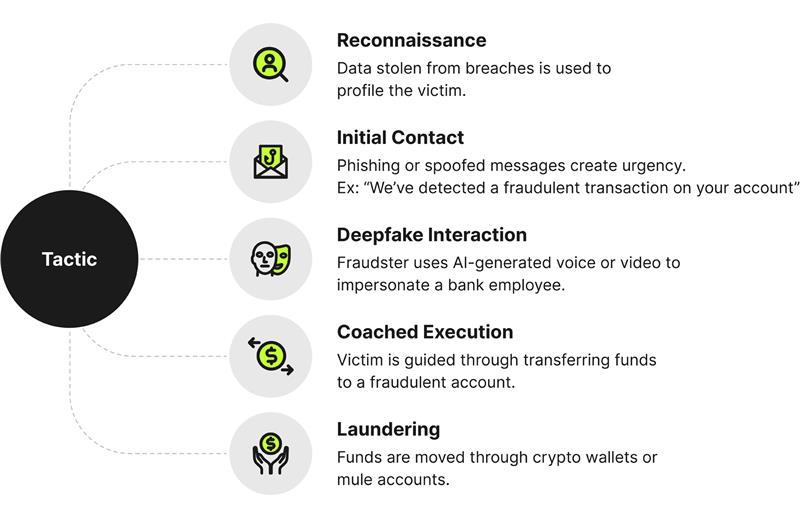

Understanding the mechanics of a modern fraud attack is essential to building an effective defense. Consider the modern Authorized Push Payment (APP) scam, a multi-stage attack that blends technology and psychological manipulation. APP scams are responsible for billions in losses annually.

It begins when a scammer uses stolen data from a non-financial breach, such as those involving hotel chains, loyalty programs, or social media platforms, to construct a detailed profile of the target. The scammer then makes initial contact through a phishing message designed to create a sense of urgency, using phrases like "A fraudulent transaction has been detected on your account." The victim is prompted to call a number or click a link that connects them to the fraudster posing as a bank employee.

This is where Generative AI becomes a powerful tool for deception. An impersonator can use a deepfake voice, trained on publicly available audio, to build credibility. They "verify" the victim’s identity using the stolen information, solidifying the victim's trust. The victim is told of the “compromise” and instructed to transfer their funds to a new, "secure" account provided by the perpetrator, supposedly to protect the money.

The distressed victim logs into their own banking application and passes the standard authentication checks, while the perpetrator stays on the line, coaching them step-by-step through adding the fraudulent account as a new payee and executing the transfer.

From the bank’s perspective, everything appears routine: a known user on a known device, logging in correctly and authorizing a payment. By the time the victim realizes they’ve been deceived, the money is gone, often laundered through a chain of crypto wallets or mule accounts, which makes recovery nearly impossible.

This scenario highlights the core challenge: the transaction itself was authorized. Traditional fraud systems, looking only at credentials and transaction details in isolation, are often blind to the manipulation that preceded it.

As financial scams evolve from credential theft to direct manipulation of a target, the question of liability has become increasingly complex. In traditional fraud scenarios—where unauthorized access is gained through stolen credentials—banks typically assume responsibility for reimbursing customers. However, in the case of APP scams, where the customer is deceived into authorizing the transaction themselves, the liability landscape shifts in the following ways:

The illusion of consent: APP scams prey on customer trust and urgency, often using deepfakes and spoofed communications to impersonate bank officials. Legally, because the transaction is technically authorized by the account holder, banks may argue that liability rests with the consumer. This creates a challenging paradox: the more sophisticated and convincing the scam, the more likely the victim is to be held responsible.

Duty of care and detection: Financial institutions are under growing scrutiny for failing to prevent scams—even when the customer provides authorization. Regulators and consumer advocates argue that banks have a duty of care to flag suspicious activity, especially when behavioral anomalies or contextual red flags arise. For instance, a sudden high-value transfer to a new payee under unusual login conditions should trigger closer inspection.

Model of shared accountability: Liability in scam scenarios is shifting toward a model of shared accountability. Consumers must remain vigilant, while banks are expected to invest in intelligent fraud detection systems and transparent communication protocols. The most resilient institutions will be those that not only detect scams but also empower customers to recognize and resist manipulation.

Increasingly, forward-thinking financial institutions treat scam prevention as a differentiating pillar of their customer-protection strategy, deploying both new technology and awareness campaigns to reinforce trust and deepen long-term customer relationships.

To identify blind spots, develop a comprehensive, 360-degree digital profile of each customer. This is not just about verifying a user at the front door—it's about continuously recognizing their digital DNA throughout their entire journey.

This foundation is built on two key principles:

This enriched context transforms a simple transaction into a detailed narrative that helps you detect coached, fraudulent behavior.

For example, when the APP scam victim adds the new payee, a unified system would see that the account number has no history with the customer, the device has a new browser fingerprint, and the beneficiary account has received multiple payments from other customers in the last few days. None of these signals is definitive proof of a scam on its own, but together they paint a high-risk picture and give you an opportunity to intervene.
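The idea that individually weak signals become meaningful in combination can be sketched as a simple weighted sum. This is a minimal illustration under stated assumptions, not a production model: the field names, the weights, and the `fan_in_threshold` of five distinct senders are all hypothetical choices made for the example. With these weights no single signal contributes more than 0.5, while all three together reach the maximum score of 1.0.

```python
from dataclasses import dataclass


@dataclass
class PayeeContext:
    """Hypothetical signals gathered when a customer adds a payee."""
    payee_seen_before: bool       # has this customer paid this account before?
    fingerprint_known: bool       # does the browser fingerprint match one on file?
    distinct_senders_recent: int  # distinct customers who paid this beneficiary recently


# Illustrative weights only; a real system would learn or calibrate them.
WEIGHTS = {"new_payee": 0.25, "new_fingerprint": 0.25, "beneficiary_fan_in": 0.5}


def combined_risk(ctx: PayeeContext, fan_in_threshold: int = 5) -> float:
    """Add the weighted signals into a score between 0 and 1."""
    score = 0.0
    if not ctx.payee_seen_before:
        score += WEIGHTS["new_payee"]
    if not ctx.fingerprint_known:
        score += WEIGHTS["new_fingerprint"]
    # Many different senders paying one account in a short window suggests a mule account.
    if ctx.distinct_senders_recent >= fan_in_threshold:
        score += WEIGHTS["beneficiary_fan_in"]
    return score


scenario = PayeeContext(payee_seen_before=False, fingerprint_known=False, distinct_senders_recent=8)
print(combined_risk(scenario))  # 1.0
```

In practice the weights would more likely come from a trained model than from hand-tuning, but the additive structure shows why a new payee, a new fingerprint, and a busy beneficiary account are far more telling together than apart.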

From reactive to predictive: Behavioral analytics and threat intelligence

Once you’ve built a rich, contextual data foundation, you can deploy advanced analytics to proactively detect manipulation. This is where your defense moves from validating credentials to analyzing intent.

Behavioral anomaly detection, powered by AI models, helps you establish a baseline of normal activity for each user. The models learn the typical sequence and timing of a user's actions. A legitimate user might browse their balance, check a statement, and then set up a payment to an expected recipient. A coached scam victim, on the other hand, exhibits a different rhythm. They log in, immediately navigate to the payment screen, copy and paste a long, unfamiliar account number (itself a red flag, as genuine users often type numbers manually or select from a list), and attempt to transfer an unusually large percentage of their balance or an amount near the daily transfer limit.
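Before any model can compare a session with a baseline, the session has to be turned into features. The sketch below extracts the behaviors described above from a list of session events. The event names (`"login"`, `"open_payment"`, `"paste_account"`), the 90% "near the limit" ratio, and the feature set are hypothetical assumptions for illustration; real telemetry schemas vary by institution and vendor.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SessionEvent:
    name: str         # hypothetical event names, e.g. "login", "view_balance", "open_payment", "paste_account"
    t_seconds: float  # seconds since the session started


@dataclass
class SessionFeatures:
    steps_before_payment: int    # events (screens or actions) between login and the payment screen
    seconds_to_payment: float    # time from login to reaching the payment screen
    pasted_account_number: bool  # was the payee account number pasted rather than typed?
    balance_fraction: float      # transfer amount as a share of the available balance
    near_daily_limit: bool       # is the amount close to the daily transfer limit?


def extract_features(events: List[SessionEvent], amount: float, balance: float,
                     daily_limit: float, near_limit_ratio: float = 0.9) -> SessionFeatures:
    # Assumes the session contains both a "login" and an "open_payment" event.
    login = next(e for e in events if e.name == "login")
    payment = next(e for e in events if e.name == "open_payment")
    between = [e for e in events if login.t_seconds < e.t_seconds < payment.t_seconds]
    return SessionFeatures(
        steps_before_payment=len(between),
        seconds_to_payment=payment.t_seconds - login.t_seconds,
        pasted_account_number=any(e.name == "paste_account" for e in events),
        balance_fraction=amount / balance if balance > 0 else 1.0,
        near_daily_limit=amount >= near_limit_ratio * daily_limit,
    )


# A coached session: straight to payments, account number pasted, most of the balance sent.
coached = [SessionEvent("login", 0.0), SessionEvent("open_payment", 4.0),
           SessionEvent("paste_account", 10.0)]
features = extract_features(coached, amount=9_500, balance=10_000, daily_limit=10_000)
print(features)
```

A typical legitimate session would instead show a few steps (balance, statement) before payments, a longer lead time, and a small balance fraction; the model's job is to notice when those features move sharply away from what is normal for that specific user.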

The model then calculates a real-time risk score that reflects how far the current session deviates from that user’s behavioral baseline.
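The article does not say how the score is computed, so the following uses a deliberately simple, swapped-in technique: a per-feature z-score against the user's own history, taking the largest deviation as the score. It is a sketch only; production systems typically use richer sequence models. The feature names and history values are hypothetical.

```python
import statistics
from typing import Dict, List


def deviation_score(history: Dict[str, List[float]], current: Dict[str, float],
                    min_std: float = 1e-6) -> float:
    """Largest absolute z-score of the current session against the user's own history.

    Features with fewer than two past observations are skipped, because a
    sample standard deviation needs at least two points.
    """
    worst = 0.0
    for feature, value in current.items():
        past = history.get(feature, [])
        if len(past) < 2:
            continue
        mean = statistics.fmean(past)
        std = max(statistics.stdev(past), min_std)  # avoid dividing by zero for constant histories
        worst = max(worst, abs(value - mean) / std)
    return worst


# Hypothetical baseline for one user: small transfers, a minute or two before paying.
history = {"amount": [100.0, 200.0, 300.0], "seconds_to_payment": [60.0, 90.0, 120.0]}
current = {"amount": 9_500.0, "seconds_to_payment": 4.0}
print(deviation_score(history, current))  # 93.0
```

Because the baseline is per user, the same $9,500 transfer could score low for a customer who routinely moves large sums and very high for one who never does, which is the point of tuning the score to individual behavior rather than to global thresholds.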

Proactive threat intelligence can take this further. When your system checks the fraudster-provided payee account, an integrated threat feed might reveal that the account is on an industry-shared watchlist for mule activity or has been shared on private Discord servers used by scammers. This external intelligence provides strong corroboration, allowing you to move from suspicion to high confidence and intervene before the funds are lost.
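A minimal sketch of folding a watchlist hit into the risk score is shown below. The watchlist here is a hypothetical in-memory set and the `boost` of 0.4 is an arbitrary illustrative value; real threat feeds have their own provider-specific APIs and formats, which are not modeled here. Normalizing account numbers matters because a pasted number may contain spaces that a watchlist entry does not.

```python
from typing import Iterable, Set, Tuple


def normalize_account(account: str) -> str:
    """Strip whitespace and upper-case so differently formatted numbers compare equal."""
    return "".join(account.split()).upper()


def build_watchlist(entries: Iterable[str]) -> Set[str]:
    """Normalize feed entries once so lookups are simple set membership checks."""
    return {normalize_account(e) for e in entries}


def enrich_with_threat_intel(base_score: float, payee_account: str,
                             watchlist: Set[str], boost: float = 0.4) -> Tuple[float, bool]:
    """Raise the risk score (capped at 1.0) when the payee appears on the watchlist."""
    hit = normalize_account(payee_account) in watchlist
    score = min(1.0, base_score + boost) if hit else base_score
    return score, hit


# Hypothetical feed contents; a real feed would be refreshed from the provider.
watchlist = build_watchlist(["12 3456 7890", "99887766"])
print(enrich_with_threat_intel(0.5, "1234567890", watchlist))
```

Returning the `hit` flag alongside the score lets downstream logic treat a confirmed watchlist match differently from a merely high behavioral score, for example by escalating straight to an investigator.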

Detection is just the start. Your response must be intelligent and adaptive. A one-size-fits-all approach, such as blocking every flagged transaction outright, creates unnecessary friction and frustrates legitimate customers. Instead, you should apply a spectrum of intervening friction that is directly proportional to the calculated risk.

Low risk: The transaction proceeds seamlessly, with no interruption to the user experience beyond a brief reminder to follow safe payment practices.

Medium risk: Introduce a "step-up" challenge or notice. Instead of blocking the transaction, send a secure push notification to the user’s trusted device with specific, clear language: "You are about to send $5,000 to a new payee. We have detected unusual activity on your account. Are you certain you want to proceed?" This moment of intervening friction can be enough to break the fraudster's influence and make the victim pause and reconsider.

High risk: Temporarily hold the transaction and trigger a more robust authentication. To counter a potential deepfake attack, this could involve a liveness check—requiring the user to perform a specific action like reading a random phrase in a live video feed. In more extreme cases, the transaction is stopped, a safety notice is sent to the customer, and an alert is sent to your in-house fraud investigation team for immediate follow-up.
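The tiers above amount to a mapping from a risk score to an action. The sketch below assumes a score between 0 and 1; the thresholds (0.4, 0.7, 0.9) and the action names are hypothetical and would need calibration against real outcomes. It also shows one way to generate the unpredictable phrase for a liveness check, using Python's `secrets` module so the phrase cannot be anticipated and pre-recorded; the tiny word list is a toy assumption.

```python
import secrets
from enum import Enum


class Action(Enum):
    PROCEED_WITH_NOTICE = "proceed_with_notice"          # low risk
    STEP_UP_CONFIRMATION = "step_up_confirmation"        # medium risk
    HOLD_AND_LIVENESS_CHECK = "hold_and_liveness_check"  # high risk
    BLOCK_AND_ESCALATE = "block_and_escalate"            # extreme cases


def choose_action(risk: float, medium: float = 0.4, high: float = 0.7,
                  extreme: float = 0.9) -> Action:
    """Map a 0-1 risk score to a proportional intervention (illustrative thresholds)."""
    if risk >= extreme:
        return Action.BLOCK_AND_ESCALATE
    if risk >= high:
        return Action.HOLD_AND_LIVENESS_CHECK
    if risk >= medium:
        return Action.STEP_UP_CONFIRMATION
    return Action.PROCEED_WITH_NOTICE


# Toy word list; a real deployment would use a much larger vocabulary.
WORDS = ["river", "orange", "window", "seven", "garden", "silver", "planet", "candle"]


def liveness_phrase(n_words: int = 4) -> str:
    """Random phrase for the user to read aloud on live video."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


print(choose_action(0.55))  # Action.STEP_UP_CONFIRMATION
print(liveness_phrase())
```

Keeping the thresholds as parameters makes it easy to tune the balance between friction and protection as false positive data accumulates.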

This layered response helps mitigate scams while minimizing disruption to legitimate customers—preserving trust and satisfaction.

Fighting scams is not a one-time initiative—it is a continuous cycle of learning, adaptation, and improvement. You can accelerate your capabilities and ensure long-term resilience by fostering a collaborative partnership with fraud technology experts. This isn't about outsourcing responsibility—it's about augmenting in-house teams with specialized expertise in data science, behavioral analytics, and emerging fraud tactics.

A successful partnership focuses on co-creating a strategic roadmap, implementing and fine-tuning the right technology stack, and establishing a framework for continuous monitoring and model improvement. The measure of success extends beyond just reducing fraud losses. It includes lowering false positive rates to improve customer experience, increasing the efficiency of fraud investigation teams, and ultimately, strengthening the customer’s trust that their institution is truly looking out for their financial well-being.
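The success measures named above can be made concrete from alert outcomes. This sketch uses standard confusion-matrix ratios: false positive rate (customer friction), detection rate (scams caught), and alert precision (how much of the investigation team's queue is real fraud). The counts in the example are invented for illustration only.

```python
from dataclasses import dataclass


@dataclass
class AlertOutcomes:
    true_positives: int   # flagged transactions later confirmed as scams
    false_positives: int  # flagged transactions that turned out to be legitimate
    false_negatives: int  # scams the system did not flag
    true_negatives: int   # legitimate transactions that were not flagged


def _ratio(numerator: int, denominator: int) -> float:
    """Safe division that returns 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0


def false_positive_rate(o: AlertOutcomes) -> float:
    """Share of legitimate transactions that were interrupted."""
    return _ratio(o.false_positives, o.false_positives + o.true_negatives)


def detection_rate(o: AlertOutcomes) -> float:
    """Share of scams that were flagged."""
    return _ratio(o.true_positives, o.true_positives + o.false_negatives)


def alert_precision(o: AlertOutcomes) -> float:
    """Share of alerts that were real scams (a proxy for investigator efficiency)."""
    return _ratio(o.true_positives, o.true_positives + o.false_positives)


# Hypothetical month of outcomes, for illustration only.
month = AlertOutcomes(true_positives=40, false_positives=160, false_negatives=10, true_negatives=9_790)
print(f"FPR {false_positive_rate(month):.4f}, detection {detection_rate(month):.2f}, "
      f"precision {alert_precision(month):.2f}")
```

In this made-up example, 80% of scams are flagged, but only one alert in five is a real scam, which is exactly the kind of trade-off that continuous monitoring and model improvement aim to shift.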

Guidehouse is a global AI-led professional services firm delivering advisory, technology, and managed services to the commercial and government sectors. With an integrated business technology approach, Guidehouse drives efficiency and resilience in the healthcare, financial services, energy, infrastructure, and national security markets.